In this article, I want to give a really basic explanation of what Precision and Recall mean. I will refrain from using terms such as True Positives, False Positives and True Negatives: although they are technically correct, they tend to be a bit overwhelming for beginners.

Both Precision and Recall are metrics that are used to analyse a predictive model. We generally calculate these statistics over a validation or test dataset.

Recall

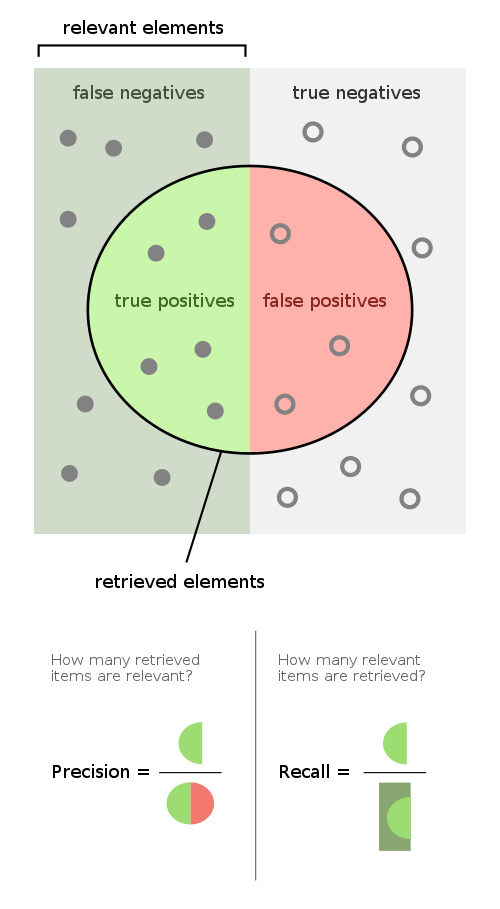

Recall is the ratio between the number of correct predictions and the total number of correct items in the set. It is expressed as the percentage of all correct (positive) items that the model managed to predict. In other words, recall indicates how good the model is at finding the correct items. So for a detector model, if there are 20 items to find and the model detected 10 of them, all correctly, the recall is 50%. Had it detected all 20, you would have a detector with 100% recall.
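To make the ratio concrete, here is a minimal Python sketch. It assumes that both the model's predictions and the correct items can be represented as sets of item IDs; the `recall` function and the integer IDs are hypothetical, chosen only for illustration. The numbers reproduce the 20-item detector example above.

```python
def recall(predicted, relevant):
    """Fraction of the correct (relevant) items that the model found."""
    predicted, relevant = set(predicted), set(relevant)
    if not relevant:
        raise ValueError("recall is undefined when there are no correct items")
    # Only predictions that are actually correct items count.
    return len(predicted & relevant) / len(relevant)


# 20 correct items, labelled 0..19 (hypothetical IDs for illustration).
relevant_items = set(range(20))

# The detector returns 10 items, all of them correct.
half_found = set(range(10))
print(f"{recall(half_found, relevant_items):.0%}")  # 50%

# Had it detected all 20, recall would be 100%.
print(f"{recall(relevant_items, relevant_items):.0%}")  # 100%
```

Note that the denominator is always the number of correct items in the set, no matter how many predictions the model makes.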

Precision

Precision is measured over the total predictions of the model. It is the ratio between the number of correct predictions and the total number of predictions (so if the model predicted 10 things and one of them turned out to be wrong, the precision is 9/10). In other words, precision indicates how good the model is at whatever it predicted, i.e. how far its predictions can be trusted.
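Here is a similar minimal sketch for precision, again assuming predictions and correct items are sets of hypothetical integer IDs. The only change from recall is the denominator: we divide by the number of predictions instead of the number of correct items.

```python
def precision(predicted, relevant):
    """Fraction of the model's predictions that turned out to be correct."""
    predicted, relevant = set(predicted), set(relevant)
    if not predicted:
        raise ValueError("precision is undefined when the model predicts nothing")
    return len(predicted & relevant) / len(predicted)


# 20 correct items, labelled 0..19 (hypothetical IDs for illustration).
relevant_items = set(range(20))

# 10 predictions: 9 correct (IDs 0..8) and 1 wrong (ID 100 is not a correct item).
predictions = set(range(9)) | {100}
print(precision(predictions, relevant_items))  # 0.9
```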

Example

Now for an actual example involving both. Say we have a detection model, there are 20 items to detect, and the model predicted 10 items. Of these, 4 predictions were wrong and 6 were correct.

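In this case, the precision is 6/10, i.e. 60%, while the recall is 6/20, i.e. 30%. Only the 6 correct predictions count towards the 20 items, so the 4 wrong predictions lower the precision but add nothing to the recall.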
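The same worked example can be checked with a short, self-contained Python sketch. As before, representing items as sets of integer IDs is an assumption made purely for illustration, and the `precision` and `recall` helpers are hypothetical names. Both metrics share the same numerator (the 6 correct predictions); they differ only in what they divide by.

```python
def precision(predicted, relevant):
    """Correct predictions divided by all predictions."""
    predicted, relevant = set(predicted), set(relevant)
    return len(predicted & relevant) / len(predicted)


def recall(predicted, relevant):
    """Correct predictions divided by all correct items in the set."""
    predicted, relevant = set(predicted), set(relevant)
    return len(predicted & relevant) / len(relevant)


relevant_items = set(range(20))   # the 20 items we want the model to find
correct = set(range(6))           # 6 correct predictions
wrong = {100, 101, 102, 103}      # 4 wrong predictions (not correct items)
predictions = correct | wrong     # 10 predictions in total

print(f"precision = {precision(predictions, relevant_items):.0%}")  # precision = 60%
print(f"recall    = {recall(predictions, relevant_items):.0%}")     # recall    = 30%
```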

Wikipedia has a good image that summarises this.

References